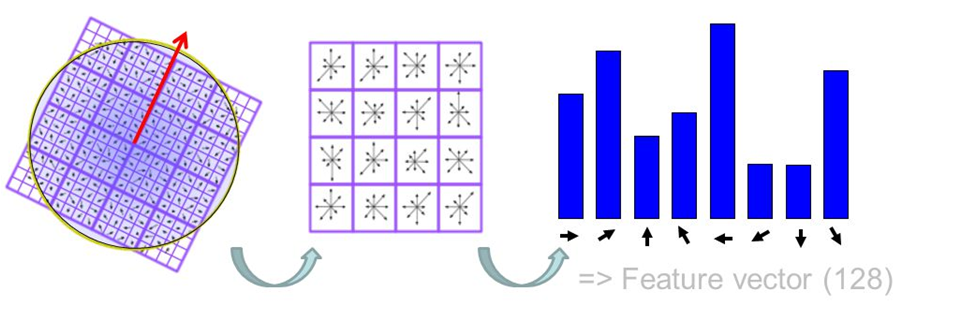

SIFT features

Scale-Invariant Feature Transform

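- Invariant to image transformations such as scale and rotation

- Features are based on the image’s gradients

- Clustering the descriptors produces a dictionary of visual words with size 128xN, where N is the size of the dictionary

- Each word is a 128-dimensional vector summarizing similar SIFT descriptors; each scene image (e.g. kitchen, store, etc.) is then described by a histogram over these words

- They were used for the feature extraction of Bag of SIFT and Spatial Pyramid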

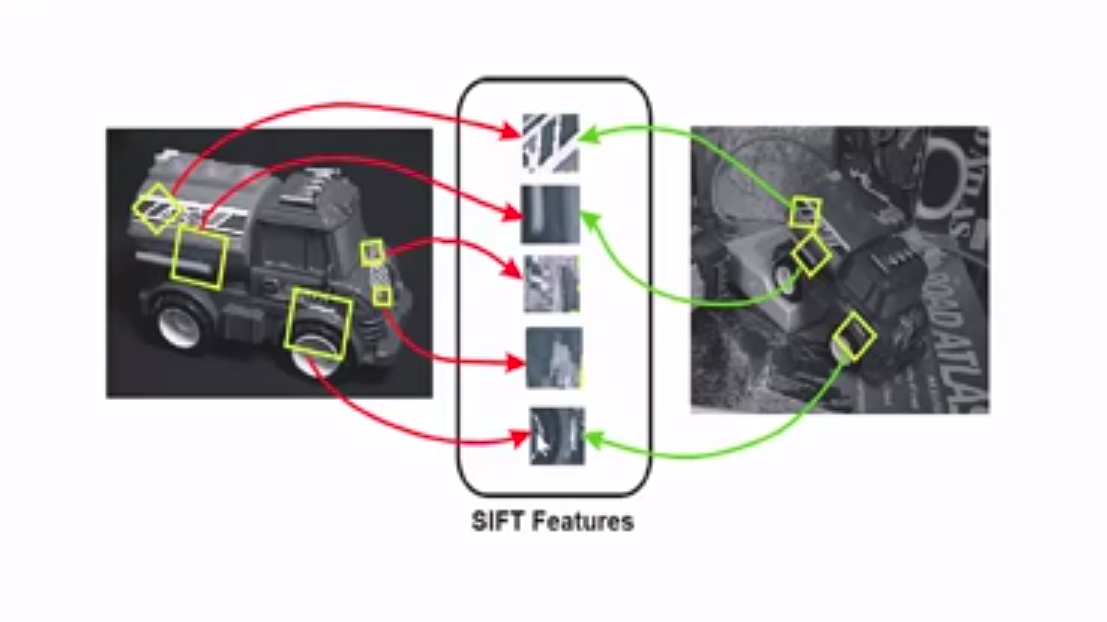

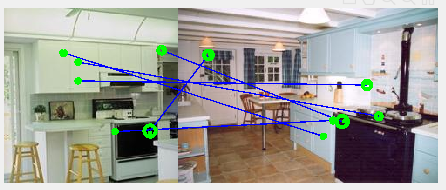

SIFT features matched between two images of the same scene

Different color spaces

Color spaces with more than one channel were handled differently from grayscale:

- Concatenate the color channels into a 2D matrix

- Use vl_phow

- Dense SIFT features

- Extract SIFTs from all channels separately; this method gave little improvement

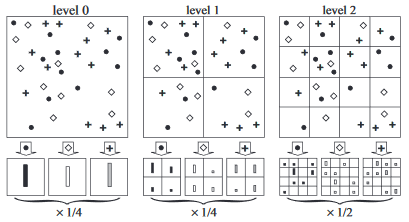

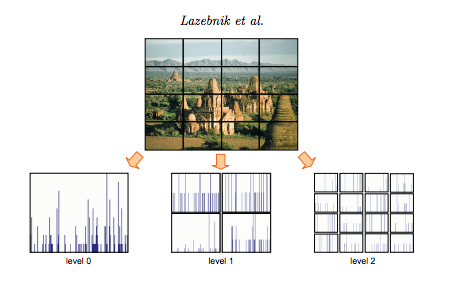

Spatial Pyramid

- Collection of orderless feature histograms

- Each level consists of a grid with histograms

- Histograms are created from the local SIFT descriptors in each quadrant

- For each level a weight is applied

Additional scene recognition method:

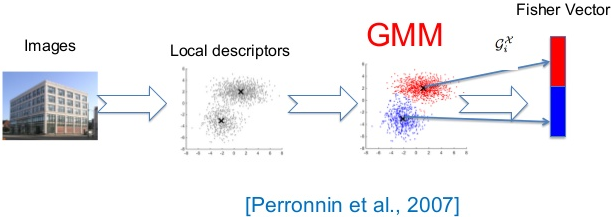

Fisher Encoding

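Introduced in "Fisher Kernels on Visual Vocabularies for Image Categorization" (Perronnin and Dance, 2007); see also "Image Classification with the Fisher Vector: Theory and Practice" (Sánchez et al., 2013)

Builds upon the Bag of Visual Words method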

Fisher Vocabulary:

- Extract SIFT descriptors from each image.

- Fit a Gaussian Mixture Model (GMM) to the obtained features instead of clustering them.

- The GMM returns the means, covariances, and priors, which are used as the vocabulary.

Fisher Vector:

- Extract SIFT descriptors

- Compute the Fisher vector of each image from its SIFT features and the already computed vocabulary

- Each vector represents an image
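The encoding step can be illustrated with a small standard-library Python sketch. It is not the code used for these slides: it assumes a diagonal-covariance GMM whose `means`, `variances`, and `priors` are already available, and it keeps only the gradients with respect to means and variances, so the output has 2·D·K entries for K Gaussians. The function name `fisher_vector` and its arguments are hypothetical.

```python
import math


def fisher_vector(descriptors, means, variances, priors):
    """Encode descriptors against a diagonal-covariance GMM (illustrative).

    descriptors: list of T vectors of length D
    means, variances: K vectors of length D each
    priors: K mixture weights summing to 1
    Returns 2 * D * K values: mean gradients, then variance gradients.
    """
    T, K, D = len(descriptors), len(priors), len(means[0])
    grad_mu = [[0.0] * D for _ in range(K)]
    grad_var = [[0.0] * D for _ in range(K)]

    for x in descriptors:
        # Log of (prior * Gaussian density) for each component.
        log_p = []
        for k in range(K):
            s = math.log(priors[k])
            for d in range(D):
                v = variances[k][d]
                s -= 0.5 * (math.log(2 * math.pi * v)
                            + (x[d] - means[k][d]) ** 2 / v)
            log_p.append(s)
        # Soft assignment (posterior), computed as a stable softmax.
        m = max(log_p)
        w = [math.exp(lp - m) for lp in log_p]
        total = sum(w)
        for k in range(K):
            gamma = w[k] / total
            for d in range(D):
                z = (x[d] - means[k][d]) / math.sqrt(variances[k][d])
                grad_mu[k][d] += gamma * z
                grad_var[k][d] += gamma * (z * z - 1.0)

    fv = []
    for k in range(K):
        fv += [g / (T * math.sqrt(priors[k])) for g in grad_mu[k]]
    for k in range(K):
        fv += [g / (T * math.sqrt(2 * priors[k])) for g in grad_var[k]]
    return fv
```

Unlike a Bag of SIFT histogram, every descriptor contributes to every Gaussian through its soft assignment, which is one reason a small vocabulary can still carry a lot of information.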

Comparison with BoS:

Advantages:

- It can be computed with much smaller vocabularies

Disadvantages:

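- Takes more storage: each vector has (2D+1)N - 1 dimensions, where D is the descriptor dimension (128 for SIFT) and N is the number of Gaussians in the vocabulary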
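To make the storage cost concrete, the short Python sketch below evaluates the (2D+1)N - 1 formula for SIFT descriptors (D = 128). The vocabulary sizes are hypothetical values chosen for illustration, not the ones used in the experiments.

```python
def fisher_vector_size(descriptor_dim, num_gaussians):
    """Dimension of a Fisher vector: (2D + 1) * N - 1."""
    return (2 * descriptor_dim + 1) * num_gaussians - 1


def bag_of_sift_size(vocab_size):
    """A Bag of SIFT histogram has one bin per visual word."""
    return vocab_size


# Hypothetical vocabulary sizes, for illustration only.
for n in (16, 64):
    print(n, bag_of_sift_size(n), fisher_vector_size(128, n))
```

Even a vocabulary of 64 Gaussians yields a vector with 16447 entries, compared with 64 bins for a Bag of SIFT histogram over the same number of words.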

Steps:

Steps for SIFT extraction

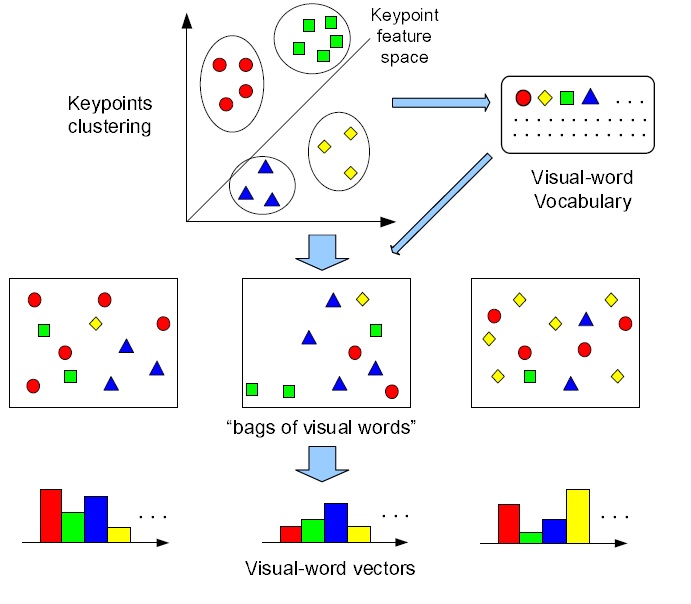

Step 1: Build the vocabulary of visual words

build_vocabulary.m

- get the images

- extract SIFT features from the images

- get the descriptors from the extracted features

- cluster the descriptors: this groups similar features across the images and creates a visual word for each group

- obtain the dictionary of visual words

Image illustrating the process of creating a vocabulary of visual words
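The clustering step can be sketched in plain Python. This is an illustration only, not the contents of build_vocabulary.m: it assumes k-means as the clustering method (the slides do not name one), and `build_vocabulary`, `vocab_size`, `iterations`, and `seed` are hypothetical names.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def build_vocabulary(descriptors, vocab_size, iterations=10, seed=0):
    """Cluster descriptors with plain k-means; the centers are the words."""
    rng = random.Random(seed)
    # Start from randomly chosen descriptors.
    centers = [list(c) for c in rng.sample(descriptors, vocab_size)]
    for _ in range(iterations):
        # Assign every descriptor to its nearest center.
        groups = [[] for _ in range(vocab_size)]
        for d in descriptors:
            nearest = min(range(vocab_size),
                          key=lambda k: squared_distance(d, centers[k]))
            groups[nearest].append(d)
        # Move each center to the mean of its group.
        for k, group in enumerate(groups):
            if group:  # keep the old center if the cluster is empty
                centers[k] = [sum(col) / len(group) for col in zip(*group)]
    return centers
```

With real SIFT data each descriptor has 128 entries, so the returned list corresponds to the 128xN dictionary, stored here as N rows of 128 values.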

Step 2: Obtain the bag of features for an image

get_bag_of_sifts.m

- extract the SIFT features of the image

- get the descriptor for each point

- match the feature descriptors with the vocabulary of visual words (vocab.mat)

- build the histogram from the feature descriptors: each feature corresponds to a visual word in the dictionary, and the histogram records how often each word occurs in the image

- the resulting histogram of visual word frequencies is the feature vector from which the class of that image is predicted

visual words -> a set of numbers representing a feature
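The matching and counting steps above can be sketched in plain Python. This is a hedged illustration rather than the contents of get_bag_of_sifts.m: `bag_of_words_histogram` is a hypothetical name, and normalizing the counts to sum to 1 is an assumption that the slides do not state.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def bag_of_words_histogram(descriptors, vocab):
    """Count how often each visual word is the nearest one to a descriptor."""
    counts = [0] * len(vocab)
    for d in descriptors:
        nearest = min(range(len(vocab)),
                      key=lambda k: squared_distance(d, vocab[k]))
        counts[nearest] += 1
    total = sum(counts)
    if total == 0:
        return [0.0] * len(vocab)
    # Assumption: normalize so images with different numbers of
    # descriptors remain comparable.
    return [c / total for c in counts]
```

The returned list has one entry per visual word and is what a classifier such as kNN or SVM would receive as the image's feature vector.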

Steps for Spatial Pyramid

spatial_pyramid.m

1. get the images

2. extract SIFT features from the images

3. get the descriptors from the extracted features

4. find the minimum distance between the extracted features and the ones from the already computed vocabulary

   D = vl_alldist2(vocab', features);

   [~, ind] = min(D);

5. construct a histogram with those values. It is the histogram of SIFT features for Level 0 of the pyramid.

6. Create a matrix for all levels of the pyramid

   6.1 Each level has a number of quadrants

   6.2 Each quadrant is represented by a histogram of its SIFT features.

   6.3 The histograms of each level are concatenated into a row for the pyramid, which results in a bigger histogram

7. Apply the appropriate weight to each level
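The pyramid construction can be sketched in plain Python as follows. This is an illustration, not the contents of spatial_pyramid.m. It assumes each feature comes with an (x, y) position and an already assigned visual word index, and it uses the level weights from Lazebnik et al.'s spatial pyramid matching, which the slides do not specify. All names are hypothetical.

```python
def spatial_pyramid(points, words, vocab_size, width, height, max_level=2):
    """Concatenate weighted per-cell word histograms over all levels.

    points: list of (x, y) feature positions inside the image
    words: visual word index of each feature
    Level l splits the image into a 2^l x 2^l grid.
    """
    pyramid = []
    for level in range(max_level + 1):
        cells = 2 ** level
        # Assumed weights: coarser levels count less than finer ones.
        if level == 0:
            weight = 1.0 / 2 ** max_level
        else:
            weight = 1.0 / 2 ** (max_level - level + 1)
        hists = [[0.0] * vocab_size for _ in range(cells * cells)]
        for (x, y), w in zip(points, words):
            # Clamp so points on the right or bottom edge stay in range.
            col = min(int(x * cells / width), cells - 1)
            row = min(int(y * cells / height), cells - 1)
            hists[row * cells + col][w] += weight
        for h in hists:
            pyramid.extend(h)
    return pyramid
```

With `max_level=2` the result has (1 + 4 + 16) × `vocab_size` entries, which shows why each extra level costs noticeably more memory and computation.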

Classification

1. kNN

2. SVM

- useful lecture: https://youtu.be/iGZpJZhqEME
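As an illustration of the first classifier, a minimal kNN over image histograms could look like the Python sketch below. The choice of squared Euclidean distance, the default `k`, and the function names are assumptions, since the slides do not state which settings were used.

```python
from collections import Counter


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def knn_predict(train_features, train_labels, query, k=1):
    """Return the majority label among the k nearest training histograms."""
    order = sorted(range(len(train_features)),
                   key=lambda i: squared_distance(train_features[i], query))
    votes = Counter(train_labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]


# Toy histograms over a 2-word vocabulary (made-up values).
train = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
labels = ["kitchen", "kitchen", "store"]
print(knn_predict(train, labels, [0.8, 0.2], k=3))
```

Every prediction compares the query against all training histograms, so the cost grows with the size of the training set.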

Results

Bag of Sift

kNN

SVM

Spatial Pyramid

kNN

SVM

Fisher Vector

kNN

SVM

Conclusion

- The smaller the step size, the slower MATLAB ran and the more memory it used

- Spatial Pyramid gave good results up to level 2 with the RGB color space

- Beyond level 2, results were not really better and required much more computational power

- A feature step size of 5 seemed to work fine with all methods

- The Fisher Vector method worked better with a smaller vocabulary

- The kNN classifier was really slow in comparison with SVM

- Too much data